In this article, let’s take a look at how to set up Docker for Libtorch projects. Environment management is important for machine learning projects too, so don’t get caught without a Dockerfile in your project!

A couple of prerequisites:

- You need to have Docker installed. (If you are following this guide for work, double-check Docker Desktop’s licensing terms, or use something like Lima to run Docker instead.)

- Working familiarity with bash. You should know more or less how to run commands in the terminal and how to move between directories.

First, Let’s Set Up Our Virtual Environment

Whether you are programming in Python, C++, or really any other language, it never hurts to work inside a virtual environment. Maybe you get a new computer, or you reopen an old project you haven’t touched in a while; you start it up and BOOM, it doesn’t work because of a library version issue. You never know when that will happen, so it’s better to have an environment than not to have one.

My personal preference is Docker because it lets you control which OS you use as well as the build steps, such as installing libraries. It also has the added bonus of working with tools like Kubernetes and AWS, but don’t worry about that for now.

The Dockerfile

Ok, let’s get this tutorial rolling. First, we are going to make a Dockerfile. Open your project directory and create a file called Dockerfile. Note that it has no file extension like .txt. Then copy the following into that file:

FROM nvidia/cuda:11.8.0-cudnn8-runtime-ubuntu22.04

RUN apt-get update && apt-get install -y cmake build-essential

WORKDIR /code

FROM tells Docker to use NVIDIA’s official CUDA image based on Ubuntu 22.04 (Docker may need to download it from Docker Hub the first time). RUN runs commands during the Docker build process. In this case, we run apt-get update and then install cmake, which you need to build your Libtorch project, along with build-essential, which provides the g++ compiler and make that cmake relies on (the CUDA runtime image does not ship with a C++ compiler). WORKDIR sets the primary working directory to /code, so when you open a shell in your container later, you will start out in the /code directory.

The docker-compose.yaml File

In addition to a Dockerfile, we need a docker-compose.yaml file. Yeah, two files sounds like a lot just to set up an environment, but Docker Compose makes running the build process a lot simpler. Otherwise, you would have to add flags for things like volumes to your docker commands, which is messy and easy to forget. This way, all the settings for your build process are stored cleanly in a single file that you can commit to your git repo.

So here is the file.

version: "3.9"
services:
  app:
    build: .
    tty: true
    volumes:
      - .:/code

- version refers to the Compose file format version, not the version of docker-compose you have installed. 3.9 should be fine for most cases, and newer releases of Docker Compose treat this field as obsolete and ignore it.

- You’ll always need a services section, but the part to take note of is app inside of services. You can name it anything you want instead of app, such as model or your project name. Pick something easy to remember, because later on you will use this name in the command that connects to your container.

- build tells Compose to build the image from the Dockerfile in the same directory as the docker-compose.yaml file.

- tty keeps your container running after it starts. If you leave it out, your container will shut down as soon as it starts, because nothing is left running to keep it alive.

- volumes tells Docker which directories to share with your container. A volume is a directory that is accessible by both the container and your local computer: any change you make to a file in it is reflected both on your local computer and inside the container. Here, .:/code mounts your project directory (.) at /code inside the container, the same directory the Dockerfile sets as WORKDIR.

Once you have both files created, you can just run the following command inside of your project directory to start your container:

docker-compose up -d

The -d flag tells Docker Compose to run the container in the background. If you leave it off, the terminal will keep showing the log output from your container, and you won’t be able to type new commands in that terminal window without shutting down the container.

After that you can enter your container with the following command:

docker-compose exec app bash

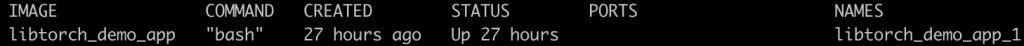

See how I wrote app here? That is because I used the name app in my docker-compose.yaml file. If this command fails, make sure your container is actually running with the docker container ls command. You should see a container with your project name as part of its name.

Now you should be inside your Docker container, which is where we will run all the remaining commands in this guide. If you need to get out of your container, just type exit and hit enter.

Installing Libtorch and Setting Up Your CMake File

Installing Libtorch

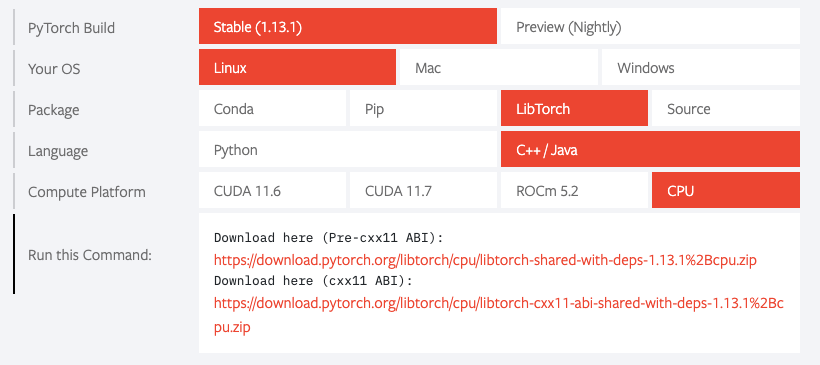

Now that we have a Docker container, go to the PyTorch website and download the Libtorch zip file. Remember that the container runs Ubuntu, a Linux image, so to use Libtorch inside your container, you will need the Linux version.

Go ahead and grab the cxx11 ABI version. Move the zip into your project directory and unzip it, which gives you a libtorch directory. I personally put it inside a folder named include, so the path is include/libtorch.

Setting up Your CMake File

At this point you should have:

- A directory named include that contains the libtorch directory

- A Dockerfile

- A docker-compose.yaml file

Next we are going to add a CMakeLists.txt file. This file is going to be somewhat based on the official installation guide with some slight differences. Go ahead and copy this into your own CMakeLists.txt file:

cmake_minimum_required(VERSION 3.18 FATAL_ERROR)

project(demo)

list(APPEND CMAKE_PREFIX_PATH "${CMAKE_SOURCE_DIR}/include/libtorch")

find_package(Torch REQUIRED)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} ${TORCH_CXX_FLAGS}")

add_executable(demo src/demo.cpp)

target_link_libraries(demo "${TORCH_LIBRARIES}")

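set_property(TARGET demo PROPERTY CXX_STANDARD 17)

Here, I am calling my project demo, but you can use whatever name you want. Just remember to change all the other parts that say demo to the name you choose. Also note the C++ standard: recent Libtorch releases (2.1 and later) require C++17, so setting CXX_STANDARD to 14 will cause compile errors with a current download.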

The big difference here between my version and the official guide version is the list(APPEND CMAKE_PREFIX_PATH "${CMAKE_SOURCE_DIR}/include/libtorch") line. In the official guide they tell you to run cmake with a -DCMAKE_PREFIX_PATH flag, but I personally think this is hard to remember so I added it inside the file instead.

Creating Your Project

Our main code file is demo.cpp, which we now need to create. First, make a new directory named src and create the demo.cpp file inside it. This matches the src/demo.cpp path in the CMakeLists.txt file above.

To start with, just grab the code from the official guide and throw that into your demo.cpp file.

#include <torch/torch.h>

#include <iostream>

int main() {

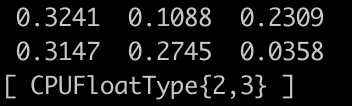

torch::Tensor tensor = torch::rand({2, 3});

std::cout << tensor << std::endl;

}Building and Running Your Project

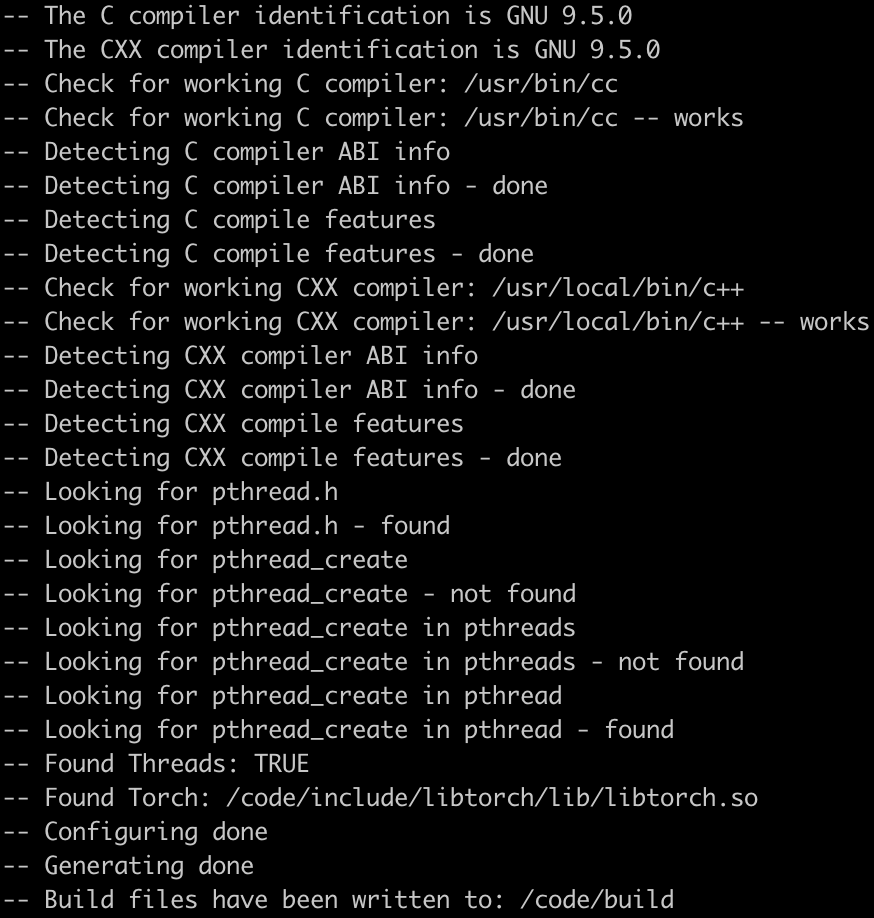

Ok, now we have a Docker environment, a demo file, and a CMakeLists.txt that describes how cmake should build our project. The final step is to build the project. Here is the first command to run:

cmake -S . -B build

This configures the project, using the current directory as the source (-S .) and creating a build directory (-B build), which is where the build output will go.

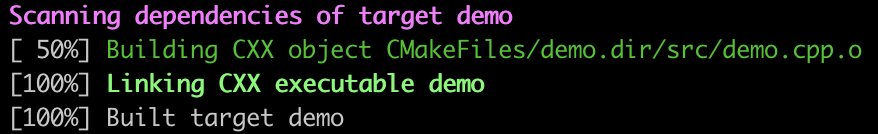

After that, we run the build command:

cmake --build build

This runs the actual build process and outputs your built project to the build directory. You will need to re-run this command any time you change any of your source code.

Finally, our project is built, so now we can run it with the following command:

build/demo

If you used the demo code, you should see a 2x3 tensor of random numbers printed in the terminal, which concludes today’s guide.

Conclusion

Once you have this set up, you can reuse the docker environment and CMakeLists.txt file for other libtorch projects as well! If you start breaking your project into multiple files, you will likely need to make additional CMakeLists.txt files or add to your current one, but I hope to cover that more in upcoming posts. For now, play around with this some more and maybe try running the code from my “Getting Started” post.

If you have questions or requests for things to write about, feel free to leave me a comment!

Want to read more stuff on libtorch? Check out some of my other articles!
